A revolution in wearable technology is now on the horizon. We’ll soon see a legion of smart glasses that combine AI-powered visual and audio information on demand, delivered with or without your smartphone nearby, all without drawing strange looks or causing physical discomfort. But the revolution has not yet arrived. It is still being built on “Aha!” moments (and maybe a little FOMO).

I came to this conclusion after speaking with Juston Payne, senior director of product management for XR at Google. We chatted shortly after I had my own “Aha!” moments with monocular and dual-screen Android XR smart glasses development kits. You can read more about this impressive first look here.

What convinced me (and Payne, of course) that this was the next big thing was a personal experience he had in Italy. As Payne explained, his family was on a trip to Rome and “I brought the glasses you just tested” (the dual-screen Android device). Payne agreed that this was a good choice given the heat.

“And then the glasses showed you where to go,” explains Payne, describing the dual-screen XR glasses’ ability to project a Google map and display turn-by-turn navigation, and even directions based on your location, right in front of your eyes.

“Then my wife, daughter and I followed Clark through the winding streets of Rome to an ice cream shop. Like he lived there. Like he belonged there.”

“Then I thought, ‘Oh wow, things have changed.’ It is completely different. It is very new. It is very powerful. It is a development that we are very enthusiastic about.”

Reach the tipping point

Even with that “Aha!” moment for the Payne family, there is still a long way to go. The fear of missing out, or “FOMO,” moment becomes real only when the availability and proliferation of Android XR smart glasses triggers FOMO among other consumers and drives adoption.

Google and Android XR partner Samsung have already announced partnerships with eyewear makers Warby Parker and Gentle Monster. However, I pointed out that most people probably still buy their glasses from places like LensCrafters and Visionworks. Payne pointed to the announced partnership with Kering Eyewear, but that company remains a purveyor of luxury eyewear.

Payne acknowledged that the vision involves much broader accessibility.

“So the idea is that over time we want to achieve the goal that you mentioned, which is that anyone can walk into a store and buy a smart version based on Android XR and Gemini. And that’s going to be a very exciting future.”

The key, he told me, is making sure the industry offers the right formats and, most importantly, the right prices. Lens options, he added, should “take into account people’s different visual needs.”

An amazing moment for Android XR

Payne and I chatted as Google and its partners prepared to launch Android Day.

While the glasses aren’t ready to debut just yet, they’re the clearest indication yet of Android’s plans and Android XR’s broader ambitions. Payne reminds me that this is “the next computing platform.”

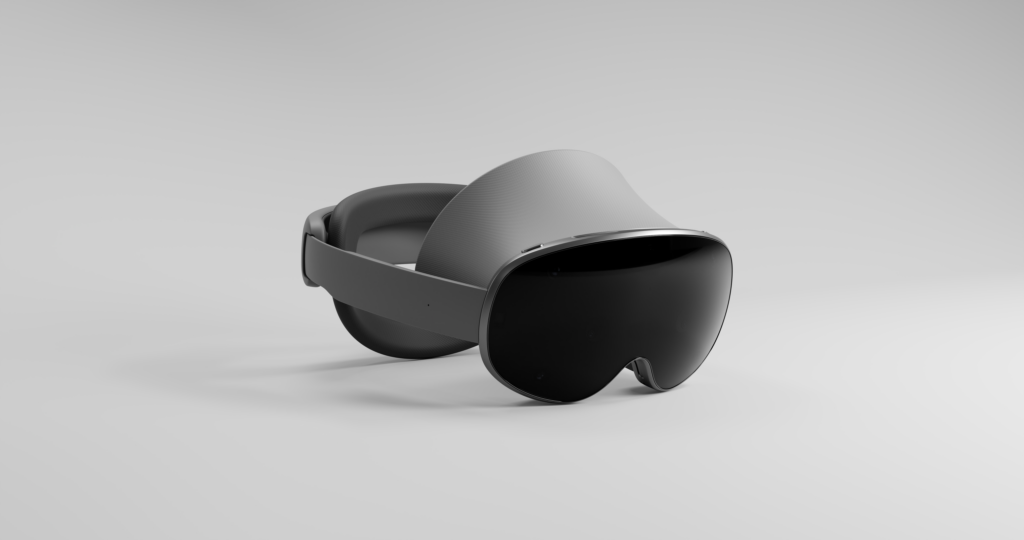

The single- and dual-screen glasses, along with the Xreal Aura (unveiled at the same event) and the Samsung Galaxy XR mixed reality headset I recently tested, now represent the full spectrum of Google’s current ambitions for Android.

Although they are all based on Gemini intelligence, I noticed differences in my hands-on demonstrations. I was curious to see whether Google, for example, dictates display style, from the Sony micro-OLED and prism screens on the Xreal Aura (which is actually tethered to a separate compute unit) to the Galaxy XR’s wide, high-resolution field of view.

It turns out that while Google doesn’t dictate viewing experiences, it does have a say in the matter. There are reasons for all these differences, Payne explained.

“It actually makes sense to think about what kind of use we expect from the products here and then work with local partner companies to find the right solutions,” he said.

Use cases define displays

Products used “episodically,” such as Aura or Galaxy XR, require optical systems that emphasize a wide field of view. With these products, it is acceptable to partially or even completely obscure your actual field of vision, since you are often seated and, for example, playing games or watching a video.

The requirements are clearly different for glasses whose lenses cannot be removed or significantly darkened. “For this you need a completely transparent lens. For example, a beautiful crystal-clear lens where the screen is integrated directly into the lens. These become waveguide solutions.”

Android XR is not meant to be a one-size-fits-all solution, and Payne envisions a future where people will own more than one XR device. “In fact, we think the same person is likely to have multiple XR products in their lifetime. Much like someone who doesn’t have a laptop or a phone, but has a laptop and a phone.”

This, of course, requires even lighter and thinner glasses, generally cheaper and more readily available smart frames, and an ecosystem of apps compatible with smart glasses. Payne is excited about the future and sees a parallel with at least one other technological era.

“We think this is actually a very early area, and the story of it hasn’t been written yet. So it’s good to see that there’s momentum, it’s good to see that there’s some traction. But to put it in context, there are no glasses with an app ecosystem yet. In that way, it’s almost like the launch of the iPhone, and we still aren’t there yet.”