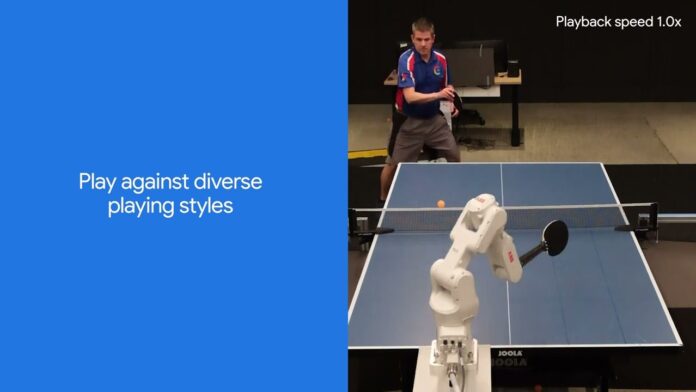

Google has announced that its DeepMind AI can now play table tennis at the level of an amateur competitor. The result is seen as a significant step toward the broader goal of building machines that can master complex real-world tasks.

A Big Achievement in AI Development

DeepMind is Google's artificial intelligence research laboratory. Its researchers have worked on a wide range of projects, from generating audio for silent videos to discovering new materials, and they have now developed a robot that plays table tennis at a level comparable to human players.

In a recently published paper, Google's researchers reported that their AI-powered robot won 13 of the 29 matches it played against human opponents. Its success varied with the skill level of those opponents, who ranged from beginners to advanced players. According to the researchers, this is the first time a robot agent has played a sport at human level, which makes it a breakthrough in both robot learning and control. They cautioned, however, that it is only a small step toward the much larger goal of building robots that can perform useful real-world skills.

Why Table Tennis?

The DeepMind team chose table tennis because of the complexity of the sport. It combines intricate physical motion, precise hand-eye coordination, and strategic decision-making. Training an AI to handle all of these elements at once was a considerable challenge, which is what makes the result notable in its own right.

Training focused on each type of shot individually, such as backhand spins and forehand serves. A high-level algorithm was then added to the AI to select the appropriate shot in real time during a match. Even after this rigorous training, the robot could not handle faster shots, which gave it less time to decide on its next move.
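The two-level design described above, with separately trained shot skills plus a higher-level algorithm that picks among them during play, can be illustrated with a toy Python sketch. This is not DeepMind's implementation: the class names, the skill names (`backhand_spin`, `forehand_drive`), the ball categories, and the rule of choosing the skill with the best observed success rate are all hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SkillStats:
    """Running success record for one hypothetical skill against one ball type."""
    successes: int = 1  # start every skill at 1/2 as a simple neutral prior
    attempts: int = 2

    @property
    def success_rate(self) -> float:
        return self.successes / self.attempts


@dataclass
class HighLevelController:
    """Toy controller that chooses which pre-trained skill to use.

    The skills themselves are assumed to be trained separately; this class
    only decides among them, based on how each has fared against a given
    type of incoming ball.
    """
    skills: list
    stats: dict = field(default_factory=dict)

    def _get(self, ball_type: str, skill: str) -> SkillStats:
        # Create a fresh record the first time a (ball type, skill) pair is seen.
        return self.stats.setdefault((ball_type, skill), SkillStats())

    def choose(self, ball_type: str) -> str:
        # Pick the skill with the highest observed success rate for this ball type.
        # On ties, max() returns the first skill in the list.
        return max(self.skills, key=lambda s: self._get(ball_type, s).success_rate)

    def record(self, ball_type: str, skill: str, success: bool) -> None:
        # Update the record after a shot so future choices can adapt.
        st = self._get(ball_type, skill)
        st.attempts += 1
        st.successes += int(success)


controller = HighLevelController(["backhand_spin", "forehand_drive"])
first = controller.choose("fast")  # tie at 1/2, so the first skill is chosen
controller.record("fast", "backhand_spin", success=False)
second = controller.choose("fast")  # backhand_spin drops to 1/3, so switch
print(first, second)
```

The controller here only learns which stored skill works best against each kind of incoming ball. In a real robot, each skill would itself be a learned control policy, and the choice would have to be made in the short time before the ball arrives, which is exactly where fast shots cause trouble.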

The Future Lies Ahead

Impressive as the current performance is, the DeepMind team is already looking further ahead. They are working on ways to make the system less predictable, which would make the AI more versatile and harder to play against. The AI also has a built-in ability to learn from human opponents by analyzing their playing strategies and, over time, adapting to their strengths and weaknesses.

This development shows more than progress in AI training and scaling; it opens new possibilities for how robots might eventually help with physical tasks in the real world. The potential everyday applications of this kind of technology are wide-ranging, and they are likely to grow as AI improves.

That a Google DeepMind AI can now play table tennis at a competitive amateur level shows that the rapid progress in artificial intelligence is real. As researchers continue to improve these systems, feats like this are likely to become increasingly common.