Samsung’s latest HBM3E memory, dubbed Shinebolt, sets a new standard. It runs at 9.8Gb/s per pin, which works out to more than 1.2TB/s of bandwidth per stack, and outpaces its forerunner by over 50%. Samsung positions this leap as a response to the growing demands of cloud computing.

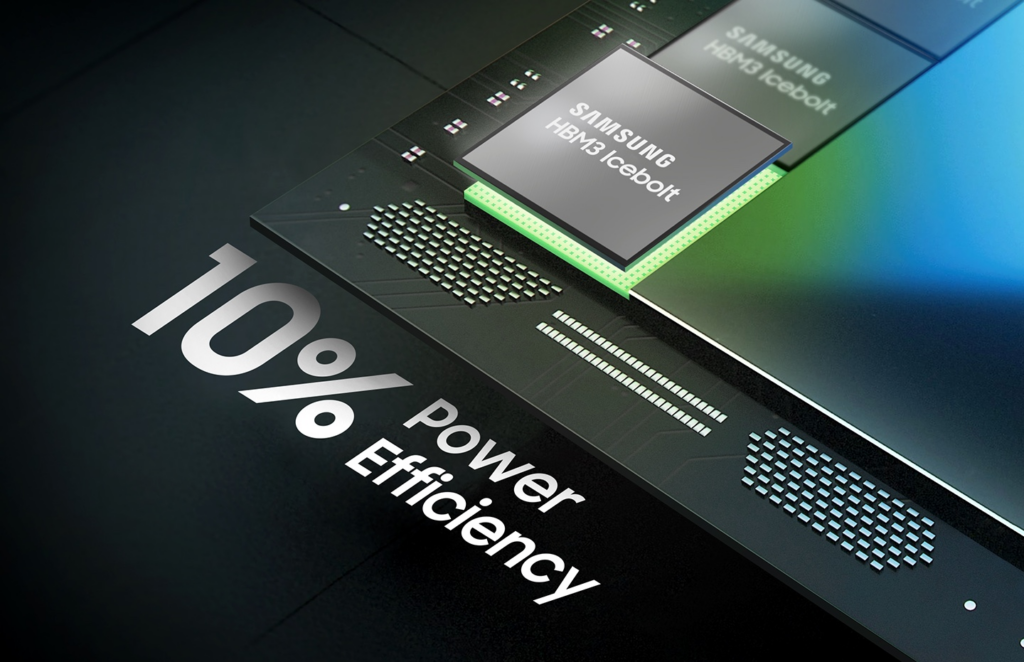

Shinebolt succeeds Icebolt, which reaches 6.4Gb/s, offered in up to 32GB variants. Because this class of memory is well suited to top-tier GPUs used for AI processing and LLMs, Samsung is increasing production to meet industry growth.
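The relationship between the quoted per-pin speeds and the headline bandwidth can be checked with a little arithmetic. The sketch below assumes a 1024-bit interface per stack, which is the usual width for HBM3-class memory but is not stated in this article; the function and variable names are illustrative only.

```python
# Assumption: each HBM stack exposes a 1024-bit data interface.
# This width is typical for HBM3-class parts but is not given in the article.
BUS_WIDTH_BITS = 1024


def stack_bandwidth_gbytes(pin_speed_gbps: float,
                           bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in GB/s, given the per-pin rate in Gb/s."""
    # Total bits per second across all pins, divided by 8 to get bytes.
    return pin_speed_gbps * bus_width_bits / 8


shinebolt = stack_bandwidth_gbytes(9.8)  # per-pin speed quoted for Shinebolt
icebolt = stack_bandwidth_gbytes(6.4)    # per-pin speed quoted for Icebolt

# Relative improvement of Shinebolt over Icebolt, in percent.
speedup_pct = (shinebolt / icebolt - 1) * 100

print(f"Shinebolt: {shinebolt:.1f} GB/s")
print(f"Icebolt:   {icebolt:.1f} GB/s")
print(f"Speedup:   {speedup_pct:.1f}%")
```

Under that assumption, 9.8Gb/s per pin gives roughly 1.25TB/s per stack, in line with the 1.2TB/s figure, and the gain over 6.4Gb/s comes out at about 53%, consistent with "over 50%".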

Empowering AI’s Future: HBM3E Integration

Nvidia and HBM3E look like a natural match: with a recent partnership in place, Nvidia envisions HBM3E in the GH200, codenamed Grace Hopper.

Compared to standard RAM, high-bandwidth memory (HBM) excels in speed and energy efficiency, thanks to 3D stacking technology that places DRAM dies on top of one another and connects them through a very wide interface.

Samsung elevates HBM3E further by using non-conductive film (NCF) tech to maximize thermal conductivity and boost speed and efficiency.

This innovation promises to accelerate AI training, enhance inference, and reduce the total cost of ownership (TCO) for data centers.

Notably, Samsung’s HBM3E may be integrated into Nvidia’s upcoming AI chip, the H200, as part of their agreement. Samsung is set to supply around 30% of Nvidia’s memory by 2024.

The ongoing partnership suggests that HBM3E could play a pivotal role as mass production takes off.