- Huawei combines thousands of NPUs to demonstrate its superiority in supercomputing

- Nvidia delivers cutting-edge, balanced and proven AI performance that businesses trust

- AMD previews a radical network design that pushes scalability into new territory

The race to build the most powerful AI supercomputing systems is heating up, with major vendors now building flagship clusters to prove they can handle the next generation of multi-billion-parameter models and data-intensive research.

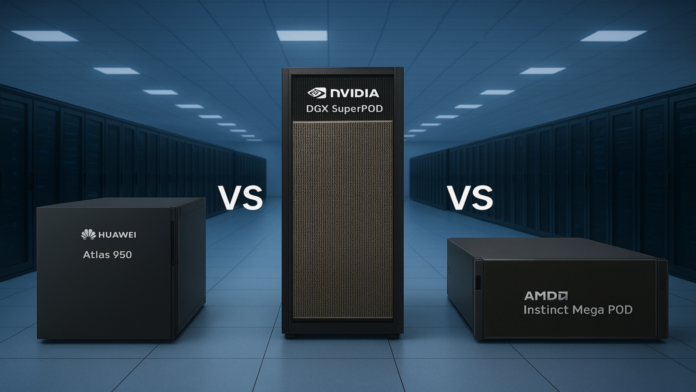

Huawei’s recently announced Atlas 950 SuperPod, Nvidia’s DGX SuperPod, and AMD’s upcoming Instinct MegaPod take different approaches to the same problem.

All three aim to deliver large-scale compute, memory, and bandwidth in a scalable package to support AI tools for generative modeling, drug discovery, autonomous systems, and data-driven science. But how do they compare?

The philosophy behind each system

What makes these systems so interesting is how they reflect the strategies of their makers.

Huawei relies heavily on its Ascend 950 chips and a custom interconnect called UnifiedBus 2.0. The focus is on building hyperscale compute density and connecting it seamlessly.

Nvidia has spent several years perfecting its DGX product line and now offers DGX SuperPod as a turnkey solution that integrates GPU, CPU, networking and storage in a balanced environment for enterprises and research institutions.

AMD is preparing to join the conversation with Instinct MegaPod, aiming to scale up around its future MI500 accelerators and a new networking fabric called UALink.

Huawei is touting exascale performance, Nvidia emphasizes a stable and proven platform, and AMD is positioning itself as a challenger that offers better future scalability.

At the heart of these clusters are powerful processors designed to deliver massive computing power and handle demanding AI and high-performance computing workloads.

Huawei’s Atlas 950 SuperPod combines 8,192 Ascend 950 NPUs, with a quoted peak of 8 exaflops at FP8 and 16 exaflops at FP4. It is designed specifically for large-scale training and inference.

Nvidia’s DGX SuperPod is built on the DGX A100 platform and offers a different kind of performance profile. Judged by chip count alone it looks small, with 20 nodes totaling 160 A100 GPUs.

However, each GPU is optimized for mixed-precision AI workloads and, when combined with high-speed InfiniBand, offers low latency.

AMD’s MegaPod is still in development, but early indications are that it will have 256 Instinct MI500 GPUs and 64 Zen 7 “Verano” processors.

Initial compute numbers have not yet been released, but AMD aims to match or surpass Nvidia’s efficiency and scale, especially as it uses next-generation PCIe Gen 6 and 3nm network ASICs.
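As a rough sanity check on the figures above, the quoted totals can be divided across each system’s chip count. The sketch below is back-of-envelope arithmetic only: it assumes the 8,192 attached to the Atlas 950 SuperPod is its NPU count, treats one exaflop as 1,000 petaflops, and assumes work spreads evenly across chips, which real workloads rarely achieve. The constant and function names are illustrative.

```python
# Figures quoted in the article. The 8,192 NPU count is an assumption
# read from the "8192" attached to the Atlas 950 SuperPod.
ATLAS_FP8_EXAFLOPS = 8
ATLAS_NPUS = 8192

DGX_NODES = 20
DGX_GPUS = 160

MEGAPOD_GPUS = 256
MEGAPOD_CPUS = 64


def per_unit(total: float, units: int) -> float:
    """Divide a system-wide total evenly across its units."""
    return total / units


# 1 exaflop = 1,000 petaflops
atlas_pflops_per_npu = per_unit(ATLAS_FP8_EXAFLOPS * 1000, ATLAS_NPUS)
dgx_gpus_per_node = per_unit(DGX_GPUS, DGX_NODES)
megapod_gpus_per_cpu = per_unit(MEGAPOD_GPUS, MEGAPOD_CPUS)

print(f"Atlas 950: ~{atlas_pflops_per_npu:.2f} PFLOPS FP8 per NPU")
print(f"DGX SuperPod: {dgx_gpus_per_node:.0f} GPUs per node")
print(f"MegaPod: {megapod_gpus_per_cpu:.0f} GPUs per CPU")
```

On these assumptions, each Ascend 950 would contribute just under one petaflop of peak FP8 compute, each DGX A100 node holds eight GPUs, and the MegaPod pairs four GPUs with each CPU.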

Feeding data to thousands of accelerators requires enormous amounts of memory and interconnect bandwidth.

Huawei claims that the Atlas 950 SuperPod has over 1PB of memory and a total system bandwidth of 16.3PB per second.

That level of bandwidth is meant to keep data moving between racks of NPUs without bottlenecks.
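To put Huawei’s headline totals in per-chip terms, the same kind of division can be applied to memory and bandwidth. This is a hedged sketch, not a vendor specification: it assumes 8,192 NPUs, uses decimal units (1 PB = 1,000 TB = 1,000,000 GB), and treats “over 1PB” as exactly 1 PB, so the memory result is a lower bound.

```python
# Assumed inputs taken from the article's quoted claims.
ATLAS_NPUS = 8192            # assumed NPU count for the SuperPod
ATLAS_MEMORY_PB = 1.0        # "over 1PB", so treat as a floor
ATLAS_BANDWIDTH_PB_S = 16.3  # total system bandwidth, PB per second

GB_PER_PB = 1_000_000  # decimal units
TB_PER_PB = 1_000

# Even split of the totals across all NPUs
memory_gb_per_npu = ATLAS_MEMORY_PB * GB_PER_PB / ATLAS_NPUS
bandwidth_tb_s_per_npu = ATLAS_BANDWIDTH_PB_S * TB_PER_PB / ATLAS_NPUS

print(f"At least ~{memory_gb_per_npu:.0f} GB of memory per NPU")
print(f"~{bandwidth_tb_s_per_npu:.2f} TB/s of bandwidth per NPU")
```

The result, roughly 122 GB and 2 TB/s per NPU, is only an average share of the system totals and says nothing about how memory or links are actually distributed.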

Nvidia’s DGX SuperPod doesn’t try to match those numbers, instead relying on 52.5TB of system memory and 49TB of high-bandwidth GPU memory, along with 200Gbps InfiniBand links per node.

The focus here is on predictable performance for the workloads that organizations already run.

Meanwhile, AMD is looking to break through with its Vulcano switch ASIC, which offers 102.4 Tbps of per-chassis throughput and 800 Gbps of external throughput.

Combined with UALink and Ultra Ethernet, this points to a system that will push past the limits of existing networks when it launches in 2027.

The biggest difference between the three contenders is their physical scale.

Huawei’s design allows the system to scale from a single SuperPod to 500,000 Ascend chips in a supercluster.

There are also claims that a single Atlas 950 configuration could include more than 100 cabinets spread over a 1,000-square-meter area.
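Those scale claims can be turned into rough bounds. The sketch below assumes 8,192 NPUs per SuperPod, treats 500,000 chips as a rounded figure, and takes “more than 100 cabinets” as exactly 100, so the per-cabinet results are upper bounds rather than real rack specifications. All names are illustrative.

```python
import math

# Assumed inputs from the article's quoted claims.
SUPERCLUSTER_CHIPS = 500_000  # rounded figure for the supercluster
NPUS_PER_SUPERPOD = 8192      # assumed NPU count per SuperPod
CABINETS = 100                # "more than 100", so treat as a floor
FLOOR_AREA_M2 = 1_000

# Whole SuperPods needed to reach the quoted chip count
superpods_needed = math.ceil(SUPERCLUSTER_CHIPS / NPUS_PER_SUPERPOD)

# With at least 100 cabinets, these are upper bounds per cabinet
max_npus_per_cabinet = NPUS_PER_SUPERPOD / CABINETS
max_area_per_cabinet_m2 = FLOOR_AREA_M2 / CABINETS

print(f"About {superpods_needed} SuperPods per supercluster")
print(f"At most ~{max_npus_per_cabinet:.0f} NPUs per cabinet")
print(f"At most {max_area_per_cabinet_m2:.0f} m^2 of floor per cabinet")
```

The floor-area figure includes aisles, cooling, and networking space, so it should be read as a footprint budget per cabinet, not the size of a cabinet.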

Nvidia’s DGX SuperPod takes a more compact approach, combining 20 nodes into a cluster that businesses can deploy without stadium-sized data halls.

AMD’s MegaPod splits the difference, with two racks of compute trays and one dedicated networking rack, showing that its architecture is built around a modular yet powerful layout.

In terms of launch, Nvidia’s DGX SuperPod is already on the market, Huawei’s Atlas 950 SuperPod is expected to be available in late 2026, and AMD’s MegaPod is expected to be available in 2027.

These systems are fighting very different battles under the same banner of AI supercomputing supremacy.

Huawei’s Atlas 950 SuperPod shows it can pack in thousands of NPUs and deliver impressive performance at scale, but its size and proprietary design may make it difficult for outsiders to adopt.

Nvidia’s DGX SuperPod looks small on paper, but stands out for its polish and reliability, providing a proven platform that businesses and research institutions can deploy today rather than waiting on promises.

AMD’s MegaPod is still in development, but with MI500 accelerators and a radical new networking fabric it could shake things up when it arrives. Until then, it remains an unproven contender.

Via Huawei, Nvidia, TechPowerUp
